How I taught my robot to realize how bad it was at holding things

by Ugo Cupcic

Dec 22, 2017

As the Chief Technical Architect of the Shadow Robot Company, I spend a lot of time thinking about grasping things with our robots. This story is a quick delve into the world of grasp robustness prediction using machine learning.

First of all, why focus on this? There are currently much more exciting projects using deep learning for robotics. For example, the work done by Ken Goldberg and his team at UC Berkeley on DexNet is very impressive. They manage to reliably grasp 99% of their test set using deep learning. But when we work on delivering a “Hand that Grasps” as a product, we have to focus on delivering smaller robust iterations first. Being able to predict whether a grasp is stable or not, dynamically, is an interesting topic for the industry. For example, if you can determine that a grasp has a high chance of failing before it actually does, you can save a lot of time by re-grasping.

The goal isn’t to give you the best solution for grasp quality using machine learning, but rather to give a gentle introduction to using machine learning in robotics for a directly useful purpose which is hard to solve with existing standard algorithms.

If you want to skip the explanations and get your hands dirty with the open source dataset and code, head on over to Kaggle.

Gathering the dataset

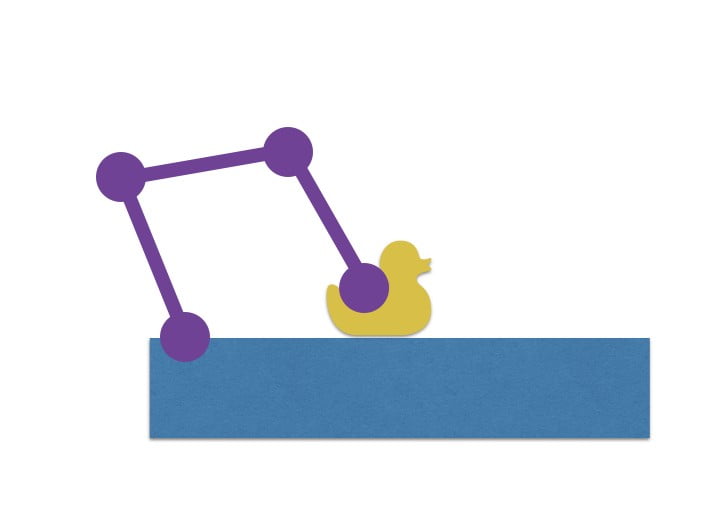

Using the Smart Grasping Sandbox, we gathered a large dataset. Since our goal is to classify whether a grasp is stable or not, the dataset has to contain both stable and unstable grasps. We also need to quantify the stability of each grasp automatically, so that the data can be annotated without manual labelling.

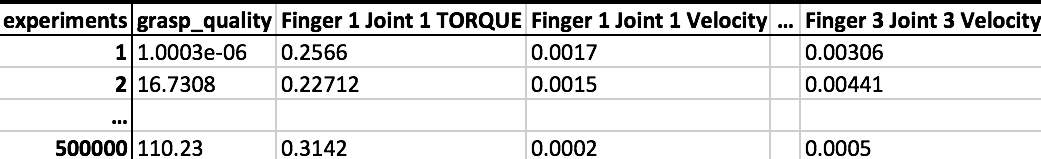

Which data should we record? We get plenty of data from the simulation. To simplify things, we’ll be looking at the state of the joints only. This state contains — for each joint — the position, the torque and the velocity. Since we want a grasp quality that’s object-agnostic, we won’t be using the joint position: the shape of the hand is purely object specific. So we’ll be recording each joint velocity and torque.
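Here is a minimal sketch of that selection. It assumes a hypothetical naming scheme in which each reading ends in `_pos`, `_vel` or `_eff` (torque); the column names in the real dataset may well differ.

```python
def select_features(joint_state):
    """Keep velocity and torque readings, drop joint positions.

    `joint_state` maps hypothetical names such as "F1J1_pos", "F1J1_vel"
    and "F1J1_eff" (torque) to their current values.
    """
    kept_suffixes = ("_vel", "_eff")
    # Sort the names so the feature vector always has the same ordering.
    return [joint_state[name] for name in sorted(joint_state)
            if name.endswith(kept_suffixes)]


# Made-up readings for two joints of one finger.
sample = {"F1J1_pos": 0.42, "F1J1_vel": 0.01, "F1J1_eff": 0.30,
          "F1J2_pos": 1.10, "F1J2_vel": -0.02, "F1J2_eff": 0.25}
features = select_features(sample)
```

Each joint contributes two numbers (torque and velocity), so the input vector has twice as many entries as there are joints.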

Our dataset will look like this:

An objective grasp stability measurement

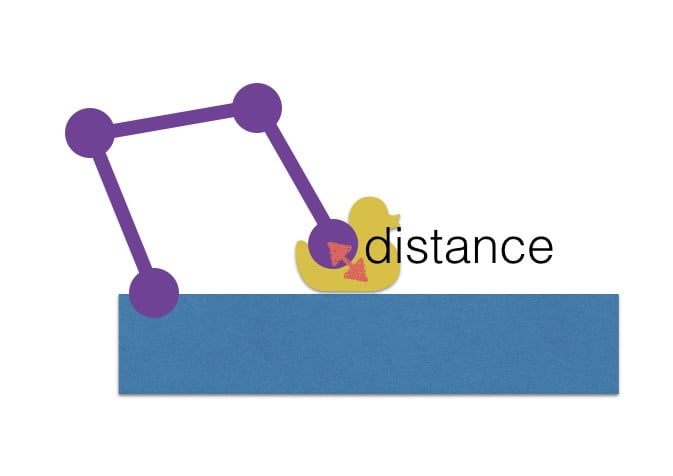

In simulation, there’s an easy way to check whether a grasp is stable or not. Once the object is grasped — if the grasp is stable — then the object shouldn’t move in the hand. This means that the distance between the object and the palm shouldn’t change when shaking the object. Lucky for us, this measure is very easy to get in simulation!
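As a rough sketch of the idea, assume we sample the palm-to-object distance a few times while shaking. The function names and the tolerance below are illustrative assumptions, not the values used to annotate the dataset.

```python
def distance_drift(distances):
    """Largest change in palm-to-object distance relative to the first sample."""
    reference = distances[0]
    return max(abs(d - reference) for d in distances)


def is_stable(distances, tolerance=0.005):
    """Label a grasp as stable when the object barely moved during the shake.

    `tolerance` (in metres) is an illustrative value, not one from the article.
    """
    return distance_drift(distances) <= tolerance


steady = [0.100, 0.101, 0.099, 0.100]    # object stays put in the hand
slipping = [0.100, 0.104, 0.112, 0.130]  # object slides away from the palm
```

The drift itself can also be kept as a continuous score, which is handy when you want grasps that sit somewhere between clearly stable and clearly unstable.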

Let’s record some data

Now that we know what we’re doing, we’ll use the Sandbox to record a large dataset. You can have a look at the code I use to do this over here. Since the Sandbox runs on Docker, it’s very easy to spawn multiple instances on a server and let them run in parallel for a while.

Since I don’t trust simulations to run for too long — call it a strong belief based on personal experience, same as the demo effect — I also only run 100 grasp iterations before restarting the Docker container with a pristine environment.

To get a relevant dataset, I randomise the grasp pose around a good grasp, which I found empirically, so that there are enough bad grasps in there. I also vary the approach distance. This gives me a roughly 50/50 ratio of stable and unstable grasps, with plenty of grasps in between.
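The randomisation could look something like the sketch below. The reference pose, the noise ranges and the approach distances are all made-up values for illustration; the actual recording code may do this differently.

```python
import random


def randomise_grasp(good_pose, position_noise, angle_noise, rng):
    """Return a copy of `good_pose` with uniform noise added to every axis.

    `good_pose` is [x, y, z, roll, pitch, yaw]; positions are in metres and
    angles in radians.
    """
    position, angles = good_pose[:3], good_pose[3:]
    return ([p + rng.uniform(-position_noise, position_noise) for p in position]
            + [a + rng.uniform(-angle_noise, angle_noise) for a in angles])


rng = random.Random(0)                        # seeded so a run can be repeated
good_pose = [0.0, 0.0, 0.15, 0.0, 0.0, 0.0]   # hypothetical known-good grasp
approach_distances = [0.05, 0.10, 0.15]       # hypothetical values, in metres

# One batch of 100 grasp attempts, matching the restart interval used above.
trials = [(randomise_grasp(good_pose, 0.02, 0.1, rng),
           rng.choice(approach_distances))
          for _ in range(100)]
```

Widening the noise ranges shifts the balance towards unstable grasps, so they are the knobs to turn if the dataset ends up too one-sided.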

For your convenience, I’ve made this dataset public and you can find it on Kaggle.

Let’s learn!

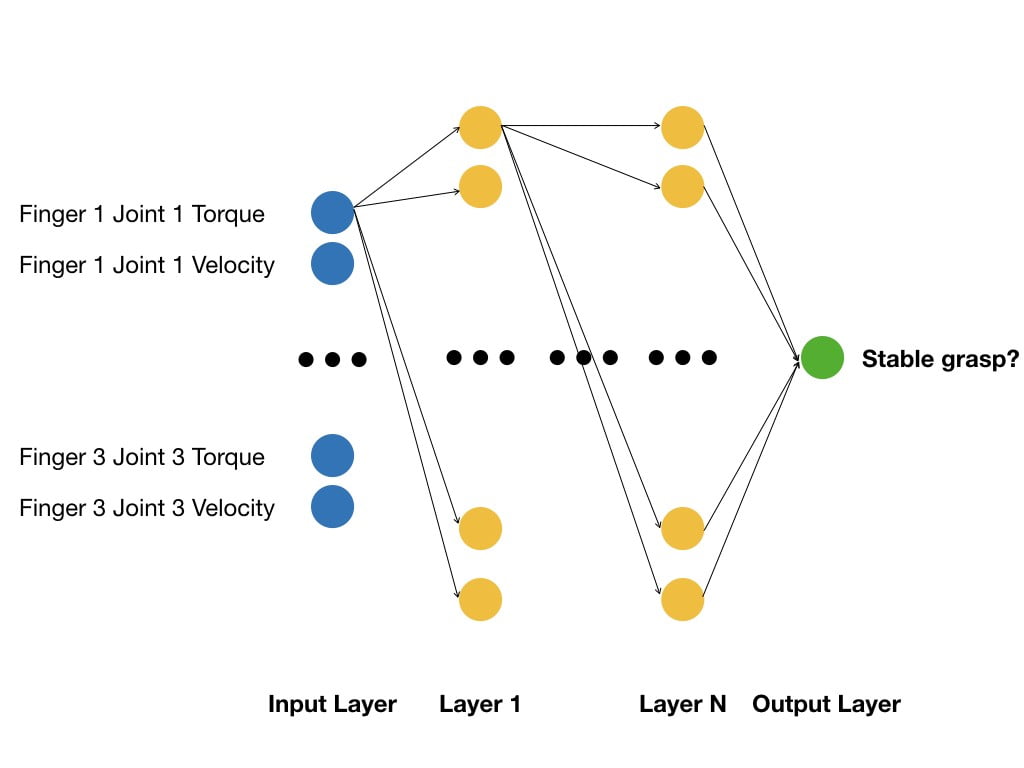

Now that we have gathered a good enough training set, we want to train a Neural Net to predict whether a grasp is stable or not, based on the current joint state.

What’s a Neural Net?

With all the current hype around Deep Learning, it’s easy to imagine a computer with a brain learning new things auto-magically. Let’s demystify the Neural Net quickly.

As shown above, a Neural Net takes a vector as its input: in our case, the torque and velocity of each joint. This vector is then transformed a few times, once per layer, and the final vector is the output of the Neural Net: the classification of the data that was given. In our case the output we want is whether the grasp is robust or not, so it’s a vector of size 1.
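To make those transformations concrete, here is a small forward pass in plain Python. The weights are made up, and the choice of ReLU and sigmoid as transition functions is my assumption for this sketch rather than a description of the trained network.

```python
import math


def relu(z):
    return max(0.0, z)


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def dense(inputs, weights, biases, activation):
    """One layer: a weighted sum of the inputs per neuron, then an activation."""
    return [activation(sum(w * x for w, x in zip(row, inputs)) + b)
            for row, b in zip(weights, biases)]


def predict(features, hidden_w, hidden_b, out_w, out_b):
    """Input vector -> hidden layer -> single output between 0 and 1."""
    hidden = dense(features, hidden_w, hidden_b, relu)
    return dense(hidden, out_w, out_b, sigmoid)[0]


# Made-up numbers: four input features, two hidden neurons, one output.
features = [0.30, 0.01, 0.25, -0.02]
hidden_w = [[1.0, 0.0, 1.0, 0.0],
            [0.0, -1.0, 0.0, -1.0]]
hidden_b = [0.0, 0.0]
out_w = [[2.0, -1.0]]
out_b = [-1.0]

score = predict(features, hidden_w, hidden_b, out_w, out_b)
```

The sigmoid on the last layer squashes the output into the 0 to 1 range, which is what lets us read it as a grasp quality.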

During the learning process, we will feed the network values we’ve gathered in the dataset. Since we know whether those joint values are for stable or unstable grasps — our dataset is annotated — the training process adjusts the parameters of the different transitions between the layers.
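To show what "adjusting the parameters" means in practice, the sketch below trains a single sigmoid neuron with stochastic gradient descent. This is a deliberately simplified stand-in for the real network, and the four samples are invented for the example.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def train(samples, labels, epochs=500, learning_rate=0.5):
    """Fit one sigmoid neuron by nudging its weights after every sample."""
    weights = [0.0] * len(samples[0])
    bias = 0.0
    for _ in range(epochs):
        for x, y in zip(samples, labels):
            prediction = sigmoid(sum(w * v for w, v in zip(weights, x)) + bias)
            # Gradient of the cross-entropy loss w.r.t. the pre-activation.
            error = prediction - y
            weights = [w - learning_rate * error * v
                       for w, v in zip(weights, x)]
            bias -= learning_rate * error
    return weights, bias


# Invented toy data: [torque, absolute velocity] per grasp, 1 = stable.
samples = [[0.9, 0.1], [0.8, 0.0], [0.2, 0.7], [0.1, 0.9]]
labels = [1, 1, 0, 0]

weights, bias = train(samples, labels)
predictions = [round(sigmoid(sum(w * v for w, v in zip(weights, x)) + bias))
               for x in samples]
```

A real network repeats the same idea across every layer, with backpropagation working out each parameter's share of the error.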

The art of machine learning consists of choosing the network topology (how many layers and neurones, plus which transition functions to use in our network) as well as gathering a good dataset. If we have all this, then we can train a network that will generalise well to cases that haven’t been seen during the training.

Training the network

The goal of this exercise isn’t to create the perfect grasp quality prediction algorithm, but rather to show how the Smart Grasping Sandbox can be used for machine learning. I chose a very simple topology for the network: a single hidden layer between the input and output layers. For more details, refer to the iPython notebook.

For simplicity’s sake, I’m using the excellent Keras library. If you can’t wait and want to see the actual code, go to Kaggle. Otherwise, read on!

After loading the dataset in memory, I split it between the training set and the test set. Validation will be run on part of the training set, and I’ll use the test set to see whether it generalizes well.
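A split along those lines can be sketched with the standard library alone. The 20% fractions and the seed are assumptions for this example, not the values from the notebook.

```python
import random


def split_dataset(rows, test_fraction=0.2, validation_fraction=0.2, seed=42):
    """Shuffle `rows`, hold out a test set, then carve validation out of the rest."""
    shuffled = rows[:]
    random.Random(seed).shuffle(shuffled)

    n_test = int(len(shuffled) * test_fraction)
    test, training = shuffled[:n_test], shuffled[n_test:]

    # Validation data comes out of the training portion, never the test set.
    n_validation = int(len(training) * validation_fraction)
    validation, train = training[:n_validation], training[n_validation:]
    return train, validation, test


rows = list(range(1000))  # stand-in for the recorded grasps
train_rows, validation_rows, test_rows = split_dataset(rows)
```

Keeping the test set untouched until the very end is what makes the final score a fair estimate of how the network copes with grasps it has never seen.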

Since my network is small, training is relatively fast, even on my laptop. When I train deeper networks, I spawn the Docker image on a beefy machine in the cloud, using NVIDIA GPUs to speed up training.

After training my network, I get an accuracy of 78.87%.
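For reference, accuracy here simply means the share of grasps for which the prediction matches the annotation. The sketch below assumes the network output is cut at 0.5, and the scores and labels are made up.

```python
def accuracy(scores, labels, threshold=0.5):
    """Fraction of grasps whose thresholded score matches the annotation."""
    predicted = [1 if score > threshold else 0 for score in scores]
    correct = sum(1 for p, y in zip(predicted, labels) if p == y)
    return correct / len(labels)


scores = [0.91, 0.15, 0.62, 0.48, 0.08]  # made-up network outputs
labels = [1, 0, 0, 1, 0]                 # made-up annotations, 1 = stable
result = accuracy(scores, labels)
```

In this toy case three of the five predictions match, so `result` is 0.6.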

Testing my trained network on live data

Now that we have trained our Neural Net, we can use it to predict the grasp quality in real time in the simulation. As you can see in the video below, the prediction works nicely most of the time.

In this video, the live prediction of the grasp quality (the blue plot on the left) is higher than 0.5 when grasping the ball the first time, and the grasp turns out to be very stable. During the second grasp, by contrast, the metric stays under 0.2, correctly predicting that the grasp will fail.

Final words

I hope this story piqued your interest. If you want to try to train your own algorithm on this dataset, the easiest thing to do is to go to Kaggle.com where it’s already set up for you.

Obviously, there’s much more that should be done to deploy this method in production. The first thing to tackle is to have a better simulation in order to pick up a wide variety of objects. I’ll also be looking at having a live objective grasp quality — the one that’s used to annotate our dataset — in order to be able to use time-series prediction instead of one shot prediction. And the final challenge will be transferring that learning to the real robot.

There are so many interesting topics in robotics to explore with machine learning: grasp quality and slippage detection, amongst others.

I hope that you can’t wait to test your ideas on the sandbox. If you do, let me know on Twitter!