Simulating the Shadow Hand

Aug 1, 2023

Running a physical robot carries costs and risks – so why not make life easier by getting things working in simulation first?

Don’t get us wrong – we love physical robots. Making things happen in the real world is why we build them, after all. But there’s a place for simulations, too! You might want to collect some early-stage results to justify a larger proposal with the budget for more robots in it. You might want to try out hardware you don’t have yet before buying it. You might want to exploit the power of GPU computation to run an algorithm at scale in virtual worlds. You might be experimenting with simulation-in-the-loop algorithms that need a model to work with. Or you might be running algorithmic experiments that you are sure will be cruel to the physical robots until you get them right.

We’re not here to judge you – just to help you play with our systems and have fun doing so. In that vein, let’s see how we can help you simulate the Shadow Hand.

There are three simulation tools we can recommend. For each of them, I’m going to explain the basics of how to get started. I’m assuming you are as happy as I am to ReadTheFineManual once you’ve got something running 🙂

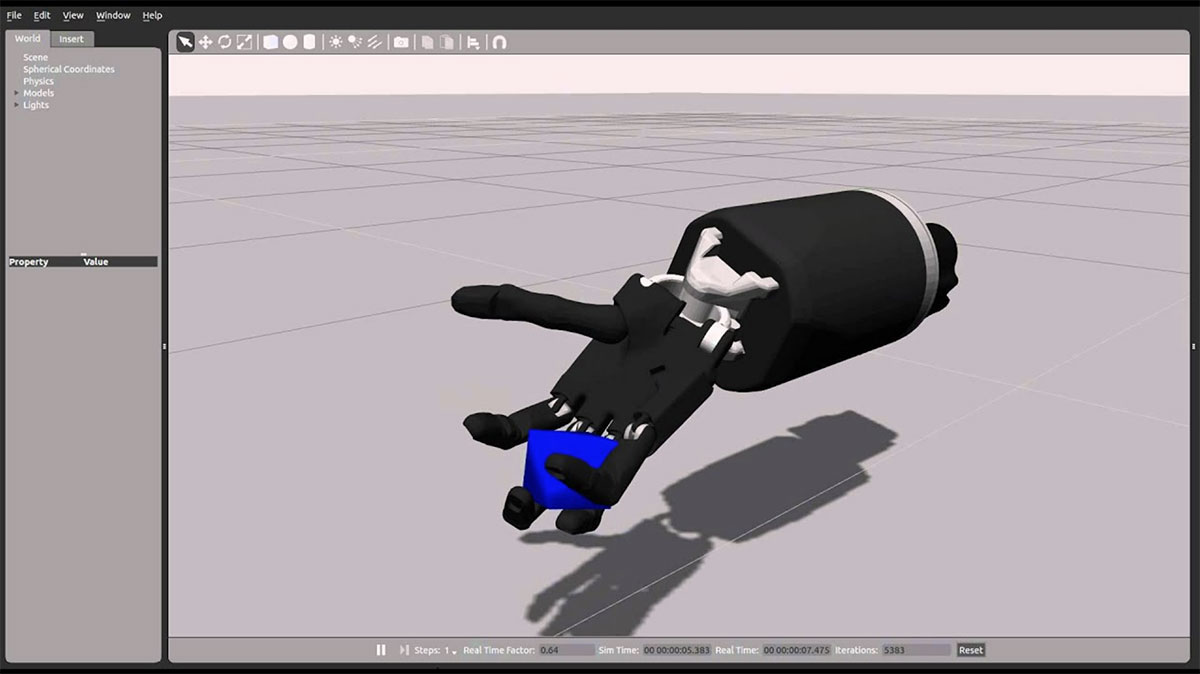

Gazebo

Gazebo is an open-source 3D robotics simulator that is widely used in the robotics community. It combines physics simulation, sensor simulation, and visualization tools, so you can build complex scenarios and test algorithms in a virtual environment.

Gazebo supports a wide range of sensors, including cameras, lidars, and sonars, and lets you visualize and analyze their data in real time. It also works with many different robot models and can simulate a wide range of robot behaviours, making it a versatile tool for robotics research and development.

Simulating Shadow Hand in Gazebo

The first option uses the default Gazebo package within ROS. Our online docs (here) tell you how to install the system on your computer so you can run the Shadow Hand in simulation. This is a Docker install on Ubuntu. Pro tip: if you haven’t got Docker installed on your computer, you’ll need to run the install one-liner twice, with a reboot in between.

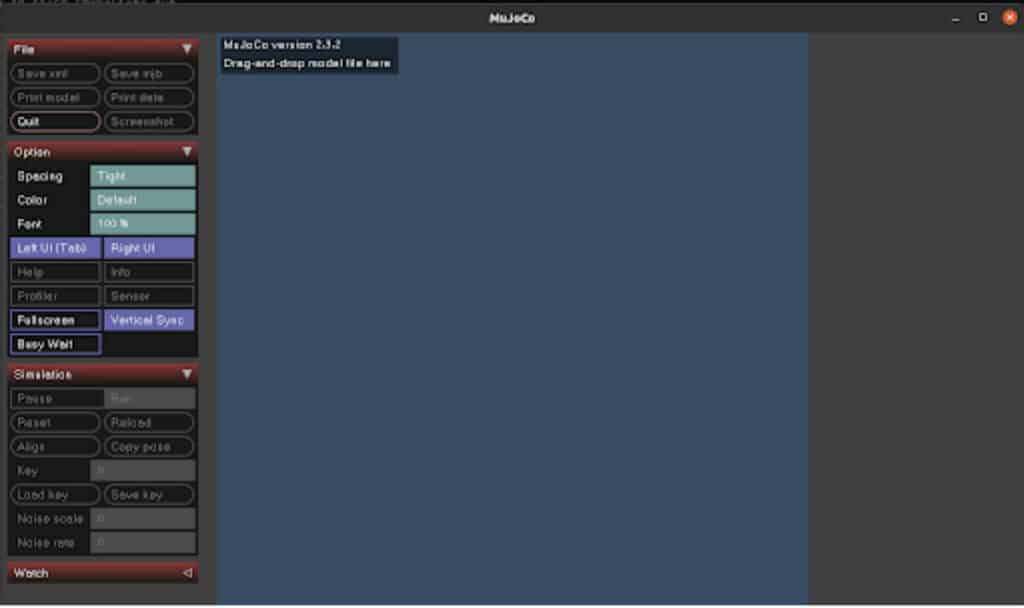

MuJoCo

MuJoCo (Multi-Joint dynamics with Contact) is a high-quality physics engine for simulating rigid-body systems and soft-body deformations. It is known for its fast and accurate simulation of both kinematics and dynamics, which makes it well-suited for real-time control and visualization. It provides a comprehensive set of APIs in C, Python, MATLAB, and other programming languages. In fact, it’s so good that DeepMind bought it and open-sourced it! (Thanks!)

Simulating Shadow Hand in MuJoCo

The MuJoCo model of the Shadow Hand is maintained as part of DeepMind’s MuJoCo Menagerie:

https://github.com/deepmind/mujoco_menagerie/tree/main/shadow_hand

To install it and get it running, follow the prerequisites here:

https://github.com/deepmind/mujoco_menagerie#prerequisites

Then, when you start MuJoCo, you will get a screen like this:

From the Menagerie’s shadow_hand folder, you can then drag left_hand.xml or right_hand.xml into the window to get this:
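If you would rather drive the hand from code than drag files into the viewer, MuJoCo’s Python bindings (`pip install mujoco`) can load the same model. What follows is a minimal sketch, not an official Shadow or DeepMind example: it assumes you have cloned the Menagerie so that `mujoco_menagerie/shadow_hand/right_hand.xml` exists, prints each actuator’s name and control range, and then sweeps every actuator’s control signal smoothly between the limits in `actuator_ctrlrange`. The script name `sweep_hand.py`, the `sweep_targets` helper, and the 4-second period are our own illustrative choices.

```python
import math
import sys
import time


def sweep_targets(t, ctrl_ranges, period=4.0):
    """Return one control value per actuator, easing each from its lower to its
    upper limit and back again over `period` seconds."""
    phase = 0.5 * (1.0 - math.cos(2.0 * math.pi * t / period))  # 0 -> 1 -> 0
    return [lo + phase * (hi - lo) for lo, hi in ctrl_ranges]


def run(xml_path, duration=20.0):
    # Imported here so sweep_targets() can be used without MuJoCo installed.
    import mujoco
    import mujoco.viewer

    model = mujoco.MjModel.from_xml_path(xml_path)
    data = mujoco.MjData(model)

    # List every actuator and its control range before starting.
    for i in range(model.nu):
        lo, hi = model.actuator_ctrlrange[i]
        print(f"{model.actuator(i).name}: [{lo:.2f}, {hi:.2f}]")

    with mujoco.viewer.launch_passive(model, data) as viewer:
        while viewer.is_running() and data.time < duration:
            step_start = time.time()
            data.ctrl[:] = sweep_targets(data.time, model.actuator_ctrlrange)
            mujoco.mj_step(model, data)
            viewer.sync()
            # Sleep off the rest of the timestep so the demo runs at roughly real time.
            remaining = model.opt.timestep - (time.time() - step_start)
            if remaining > 0:
                time.sleep(remaining)


if __name__ == "__main__" and len(sys.argv) > 1:
    # Example: python sweep_hand.py mujoco_menagerie/shadow_hand/right_hand.xml
    run(sys.argv[1])
```

Run it with the path to left_hand.xml or right_hand.xml as the only argument. On macOS, `launch_passive` needs the script to be started with `mjpython` instead of `python`. Easing with a cosine rather than jumping straight to the limits keeps the commanded motion smooth, which is kinder to a simulated hand and is a habit worth keeping for the real one.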

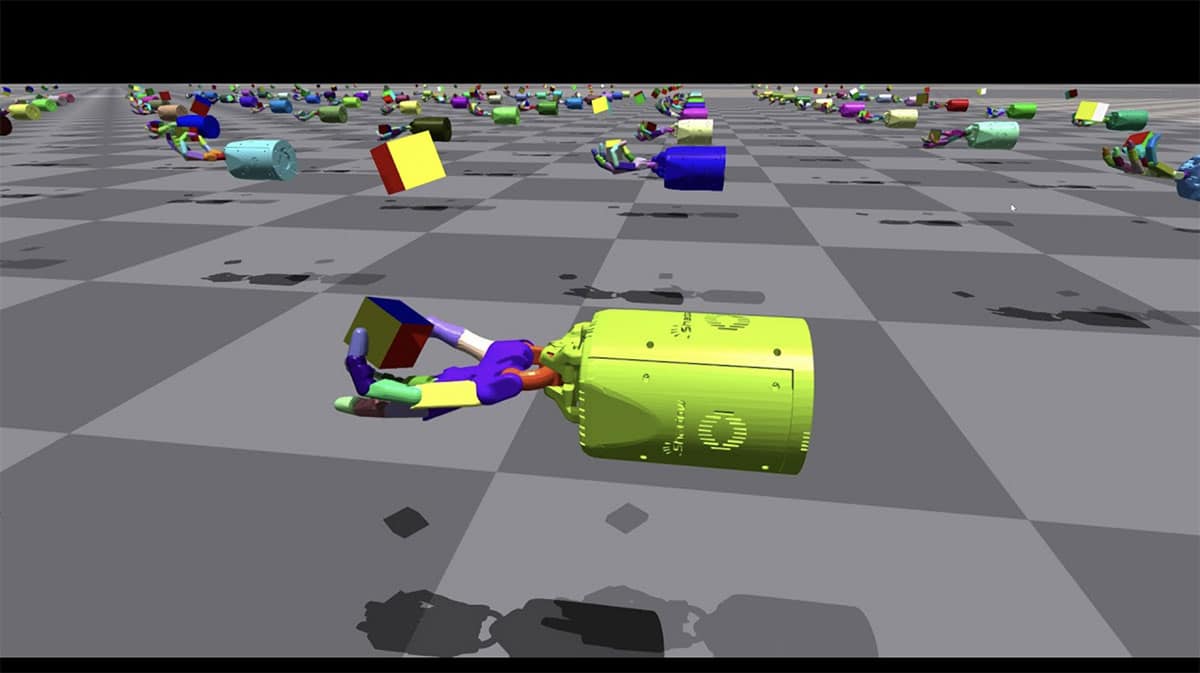

NVIDIA Isaac Sim

NVIDIA Omniverse™ Isaac Sim is a robotics simulation toolkit for the NVIDIA Omniverse™ platform. Isaac Sim has essential features for building virtual robotic worlds and experiments. It provides researchers and practitioners with the tools and workflows they need to create robust, physically accurate simulations and synthetic datasets. Isaac Sim supports navigation and manipulation applications through ROS/ROS2. It simulates data from sensors such as RGB-D cameras, lidar, and IMUs, and supports computer vision techniques such as domain randomization, ground-truth labelling, segmentation, and bounding boxes.

Together, these features let you run machine learning at scale.

Simulating Shadow Hand in NVIDIA Isaac Sim

(You really want to have an NVIDIA GPU in your computer at this point!)

Install Omniverse and Isaac Sim by following these instructions:

https://docs.omniverse.nvidia.com/app_isaacsim/app_isaacsim/install_workstation.html

Then install OmniIsaacGymEnvs, the set of reinforcement-learning environments that includes the Shadow Hand task, by following these instructions:

https://github.com/NVIDIA-Omniverse/OmniIsaacGymEnvs#installation

Once you’ve managed to run one of the examples in the README (for example, “Cartpole” from this section: https://github.com/NVIDIA-Omniverse/OmniIsaacGymEnvs#running-the-examples), you can specify “ShadowHand” as the task to start training with the Shadow Hand model. For example (PYTHON_PATH is the alias to Isaac Sim’s Python interpreter that the README has you set up):

PYTHON_PATH scripts/rlgames_train.py task=ShadowHand
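If you want to launch several training runs from a script, for example one per random seed, keep in mind that PYTHON_PATH in the OmniIsaacGymEnvs README is a shell alias, so a Python script cannot call it directly; you need the real path to Isaac Sim’s `python.sh`. The sketch that follows is a hypothetical helper of our own, not part of OmniIsaacGymEnvs: it builds the same `rlgames_train.py` command with Hydra-style `key=value` overrides (`task`, `headless`, and `seed` are among the common arguments listed in the OmniIsaacGymEnvs README) and only runs anything if the made-up environment variable `ISAAC_PYTHON` points at that interpreter.

```python
import os
import subprocess


def train_command(isaac_python, task="ShadowHand", **overrides):
    """Build the rlgames_train.py command line; each keyword becomes a key=value override."""
    args = [isaac_python, "scripts/rlgames_train.py", f"task={task}"]
    args += [f"{key}={value}" for key, value in overrides.items()]
    return args


if __name__ == "__main__":
    # ISAAC_PYTHON is a hypothetical variable holding the path to Isaac Sim's python.sh.
    isaac_python = os.environ.get("ISAAC_PYTHON")
    if isaac_python:
        # Run from the same directory you used for the README examples (the one containing scripts/).
        for seed in (0, 1, 2):
            cmd = train_command(isaac_python, headless=True, seed=seed)
            print("Running:", " ".join(cmd))
            subprocess.run(cmd, check=True)
```

Running headless skips rendering, which is usually what you want for unattended training; drop `headless=True` when you want to watch the hands learn.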

To wrap it up

So there you have it: three different ways to get a simulation of the Shadow Hand up and running.

Once you’re happy with the results, why not let us know what you’ve managed to do?

Share your simulations with us by either reaching out to marketing@shadowrobot.com or by tagging us on our socials. We’d love to see what you’re up to!