Getting Data from Humans to Robots

Aug 24, 2023

We can all agree that humans excel at manual tasks and that, ideally, robots should surpass us at them. Sometimes our advantage comes from superior dexterity; at other times, we are simply performing tasks that robots should, in theory, already be capable of.

As we advance robotics, our goal is to transfer our human skills to robots effectively.

Let’s explore some methods on how to achieve this.

First and foremost, there is the “Straightforward Programming” approach: a skilled person describes a task so meticulously that someone can write either a simple script or an intricate algorithm to guide the robot through it. This method is highly effective when the task is well defined and specific, as is often the case in industrial automation. However, the complexity can escalate rapidly. If the approach works, excellent; if not, what alternative strategies can we employ?
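To make this concrete, here is a minimal sketch of what a “straightforward” script might look like for a well-defined pick-and-place task. The `LoggingRobot` class and its `move_to`/`grip` methods are hypothetical stand-ins that only record commands instead of driving real hardware, and the coordinates are invented. The point is that every step, height and position has to be spelled out in advance.

```python
from dataclasses import dataclass, field

@dataclass
class LoggingRobot:
    """Hypothetical stand-in for a robot controller: it only records commands."""
    log: list = field(default_factory=list)

    def move_to(self, x: float, y: float, z: float) -> None:
        self.log.append(("move_to", (x, y, z)))

    def grip(self, closed: bool) -> None:
        self.log.append(("grip", closed))

def pick_and_place(robot, pick, place, approach_height=0.10):
    """Scripted pick-and-place: every motion is fixed in advance (metres)."""
    px, py, pz = pick
    qx, qy, qz = place
    robot.grip(False)                              # open gripper
    robot.move_to(px, py, pz + approach_height)    # approach from above
    robot.move_to(px, py, pz)                      # descend to the part
    robot.grip(True)                               # grasp
    robot.move_to(px, py, pz + approach_height)    # lift
    robot.move_to(qx, qy, qz + approach_height)    # carry
    robot.move_to(qx, qy, qz)                      # lower
    robot.grip(False)                              # release
    robot.move_to(qx, qy, qz + approach_height)    # retreat

robot = LoggingRobot()
pick_and_place(robot, pick=(0.4, 0.0, 0.02), place=(0.4, 0.3, 0.02))
print(len(robot.log))  # 9
```

Any change to the part's position or shape means editing the script, which is where the complexity starts to escalate.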

Secondly, there is “Programming by Demonstration”, in which a person guides the robot through the task. When the robot is equipped with advanced force sensing and vision algorithms, this technique can be quite effective. However, its effectiveness depends on the robot’s own abilities. Robots are usually fitted with rudimentary grippers rather than dexterous hands, which limits the complexity of the tasks they can perform.
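One simple way to turn a guided demonstration into something replayable is to sample the robot’s joint angles while the operator moves it, then keep only the keyframes where the pose has changed noticeably. This is a sketch under assumptions: each sample is taken to be a tuple of joint angles in radians, and the 0.05 rad threshold is an arbitrary illustrative value, not a recommended setting.

```python
from typing import List, Sequence, Tuple

Pose = Tuple[float, ...]  # joint angles in radians

def extract_keyframes(samples: Sequence[Pose], threshold: float = 0.05) -> List[Pose]:
    """Keep a sample when any joint has moved more than `threshold` radians
    since the last kept sample; always keep the first and last samples."""
    if not samples:
        return []
    kept = [0]
    for i in range(1, len(samples)):
        last = samples[kept[-1]]
        if max(abs(a - b) for a, b in zip(samples[i], last)) > threshold:
            kept.append(i)
    if kept[-1] != len(samples) - 1:
        kept.append(len(samples) - 1)
    return [samples[i] for i in kept]

# Simulated demonstration: joint 0 sweeps from 0 to 1 rad, joint 1 stays still
recording = [(i / 100, 0.3) for i in range(101)]
keyframes = extract_keyframes(recording)
print(len(recording), "samples ->", len(keyframes), "keyframes")
```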

Okay, so how can we effectively record human actions for a robot to mimic?

Ideally, we’d guide a human through the task, record it in detail, and then replay it to the robot. We might even feed this information into a machine-learning system so that it can learn from the human demonstration. At the very least, we can analyze these human activities and break them down into simpler parts, much as a song is deconstructed in a recording studio, then use this information to develop more efficient algorithms.

OK, so how about filming the person doing the task and using “machine vision” to track what they are doing?

It’s certainly a good starting point. This approach provides valuable insights into the nuances of human behaviour, such as hand-switching moments, object-holding techniques and tool usage. But in our experience, it’s really hard to track human movement using cameras. You need clear lines of sight, and the fine movements of the fingers and hands are very difficult to follow. Even the most advanced image-based tracking systems, which can monitor hands when occlusion is minimal, fail to track every joint consistently. As a result, the data tends to be inconsistent and noisy.
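Noisy camera-based joint estimates are usually smoothed before use. A minimal sketch, assuming one joint-angle estimate per video frame, is an exponential moving average; the synthetic ±3° noise below is invented for illustration. The `alpha` parameter trades smoothness against lag, which matters if the data is used to drive a robot in real time.

```python
def exponential_smoothing(values, alpha=0.3):
    """Exponential moving average: a smaller alpha gives a smoother but laggier signal."""
    if not 0 < alpha <= 1:
        raise ValueError("alpha must be in (0, 1]")
    smoothed = []
    estimate = None
    for v in values:
        estimate = v if estimate is None else alpha * v + (1 - alpha) * estimate
        smoothed.append(estimate)
    return smoothed

# Synthetic data: a finger joint held at 30 degrees with alternating +/-3 degree noise
raw = [30 + (3 if i % 2 == 0 else -3) for i in range(50)]
smooth = exponential_smoothing(raw)
print(max(abs(v - 30) for v in raw[-20:]), max(abs(v - 30) for v in smooth[-20:]))
```

Filtering only reduces jitter; it cannot recover a joint that the camera never saw because of occlusion.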

Alright, what about using motion capture?

Motion capture systems, commonly used in film production and sports science, work by attaching easily visible markers to the person: passive elements such as white dots, or active elements such as pulsed LEDs. This certainly improves tracking accuracy. However, it doesn’t entirely overcome the occlusion issues mentioned above. Additionally, calibrating the optical system to an error of less than a few millimetres is quite challenging, so if the task involves intricate movements, such as rolling a pen between the fingers, the system is likely to miss a lot.
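A back-of-the-envelope calculation shows why a few millimetres matter for fingers. If a segment’s orientation is inferred from two markers a short distance apart, the position error at each marker becomes an angle error. The numbers below (2 mm error per marker, markers 20 mm apart on a finger versus 200 mm apart on a forearm) are assumptions chosen for illustration, not measurements from any particular system.

```python
import math

def worst_case_angle_error_deg(marker_error_mm: float, marker_spacing_mm: float) -> float:
    """Worst-case orientation error of a segment defined by two markers, when each
    marker is displaced by marker_error_mm in opposite directions, perpendicular
    to the segment."""
    return math.degrees(math.atan2(2 * marker_error_mm, marker_spacing_mm))

# Illustrative: markers on a short finger segment vs. on a forearm
print(round(worst_case_angle_error_deg(2.0, 20.0), 1))   # 11.3
print(round(worst_case_angle_error_deg(2.0, 200.0), 1))  # 1.1
```

The same marker error that is negligible over a long limb segment becomes an error of more than ten degrees over a short finger segment.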

Okay, but I need it to be accurate. What’s my best option then?

For precise tracking of human movement, your best bet is a tight-fitting movement-tracking glove. Such gloves can use a variety of techniques, and some even provide additional features that assist the operator (while others make the operator’s job more difficult). For instance, some gloves can relay a sense of weight, touch, or stiffness, functionalities grouped under the term ‘haptics.’ The field of haptics is broad, spanning ultrasound devices that transmit signals to the fingers, electrical skin stimulation, vibrating piezoelectric elements, inflatable air balloons, miniature motors and linear actuators, among many others.

Gloves can measure human hand movement using:

- Sensors that fit around the joints and track the specific movement of the joint

- Mechanical linkages that go from one end of the finger to the other and track the whole movement of the finger

- Inertial sensors (that clever combination of accelerometer, gyroscope and possibly magnetometer) worn at various points on the hand to follow the movement

- Wireless measuring sensors that detect the location of the fingers relative to a source worn somewhere on the hand

- Cameras that look away from the hand and track the movement of the world. (I’m not sure I’ve seen this one used in anger but I’m sure it’s possible!)

Even then, several variations of these technologies exist. For instance, some sensors measure how the glove flexes and correlate that with joint movement. Others stretch and track their own deformation to gauge distance. Occasionally, mechanical linkages are placed over individual joints for precise motion tracking.

Each of these methods takes its own approach to capturing and interpreting human movement.
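For the flex-type sensors mentioned above, the raw reading has to be converted into a joint angle. A minimal sketch is a two-point linear calibration: record the raw value with the finger straight and fully bent, then interpolate between them. The raw values, the 0° and 90° reference angles, and the assumption of a linear response are all illustrative; real sensors are often nonlinear, and the glove may slip after calibration.

```python
def make_linear_calibration(raw_straight, raw_bent, angle_straight_deg=0.0, angle_bent_deg=90.0):
    """Return a function mapping a raw flex-sensor reading to a joint angle in degrees,
    assuming the sensor responds linearly between the two calibration poses."""
    if raw_bent == raw_straight:
        raise ValueError("calibration readings must differ")
    scale = (angle_bent_deg - angle_straight_deg) / (raw_bent - raw_straight)

    def raw_to_angle(raw):
        angle = angle_straight_deg + (raw - raw_straight) * scale
        # Clamp to the calibrated range rather than extrapolating beyond it
        low, high = sorted((angle_straight_deg, angle_bent_deg))
        return min(max(angle, low), high)

    return raw_to_angle

# Hypothetical readings: 512 with the finger straight, 812 when fully bent
to_angle = make_linear_calibration(raw_straight=512, raw_bent=812)
print(to_angle(662))  # roughly 45 degrees
```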

Our experience:

In our extensive experience, accurately measuring the human hand mechanically is challenging and often leads to significant inaccuracies. The placement of the measurement sensor is crucial, and unfortunately the potential for slippage is high: gloves and similar apparatus tend to shift excessively, which complicates data collection. Relating the measurements to the actual rotation about a finger joint’s axis, especially for the thumb, is another daunting task!

Mechanical linkages, although valuable, can quickly become cumbersome. There is also a risk that they make the operator so uncomfortable (too heavy, too rigid, etc.) that completing the task becomes impossible. Inertial sensors are a viable option; however, once again, preventing them from drifting is generally difficult, and the precision they offer often falls short of what we need.
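Drift is easy to underestimate. As a sketch of one source of it, suppose an accelerometer on a stationary hand reports a small constant bias: integrating twice to get position makes the error grow with the square of time (roughly ½·b·t²). The 0.01 m/s² bias is an assumed value for illustration, and practical IMU pipelines use sensor fusion to limit this effect, which is not shown here.

```python
def position_drift_from_bias(bias_mps2: float, duration_s: float, dt: float = 0.01) -> float:
    """Double-integrate a constant accelerometer bias for a hand that is actually
    stationary, and return the resulting position error in metres."""
    velocity = 0.0
    position = 0.0
    for _ in range(round(duration_s / dt)):
        velocity += bias_mps2 * dt   # the bias integrates into a velocity error...
        position += velocity * dt    # ...which integrates into a position error
    return position

# Assumed bias of 0.01 m/s^2 (about 1 milli-g)
for t in (1, 10, 60):
    print(f"{t:>3} s: {position_drift_from_bias(0.01, t):.3f} m")
```

After a minute, the estimated position of a motionless hand has wandered by roughly 18 m, which is why inertial tracking is usually paired with some external reference.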

We have found that wireless measurement sensors, particularly those from Polhemus, give excellent results. These use a transmitting source worn on the palm and receivers at the fingertips, and provide high-quality data for hand movement tracking. However, that still leaves the challenge of locating the hand within the wider three-dimensional space. For this, we have found the HTC Vive to be an excellent solution, so you will often see the Vive tracker featured in our demonstrations.

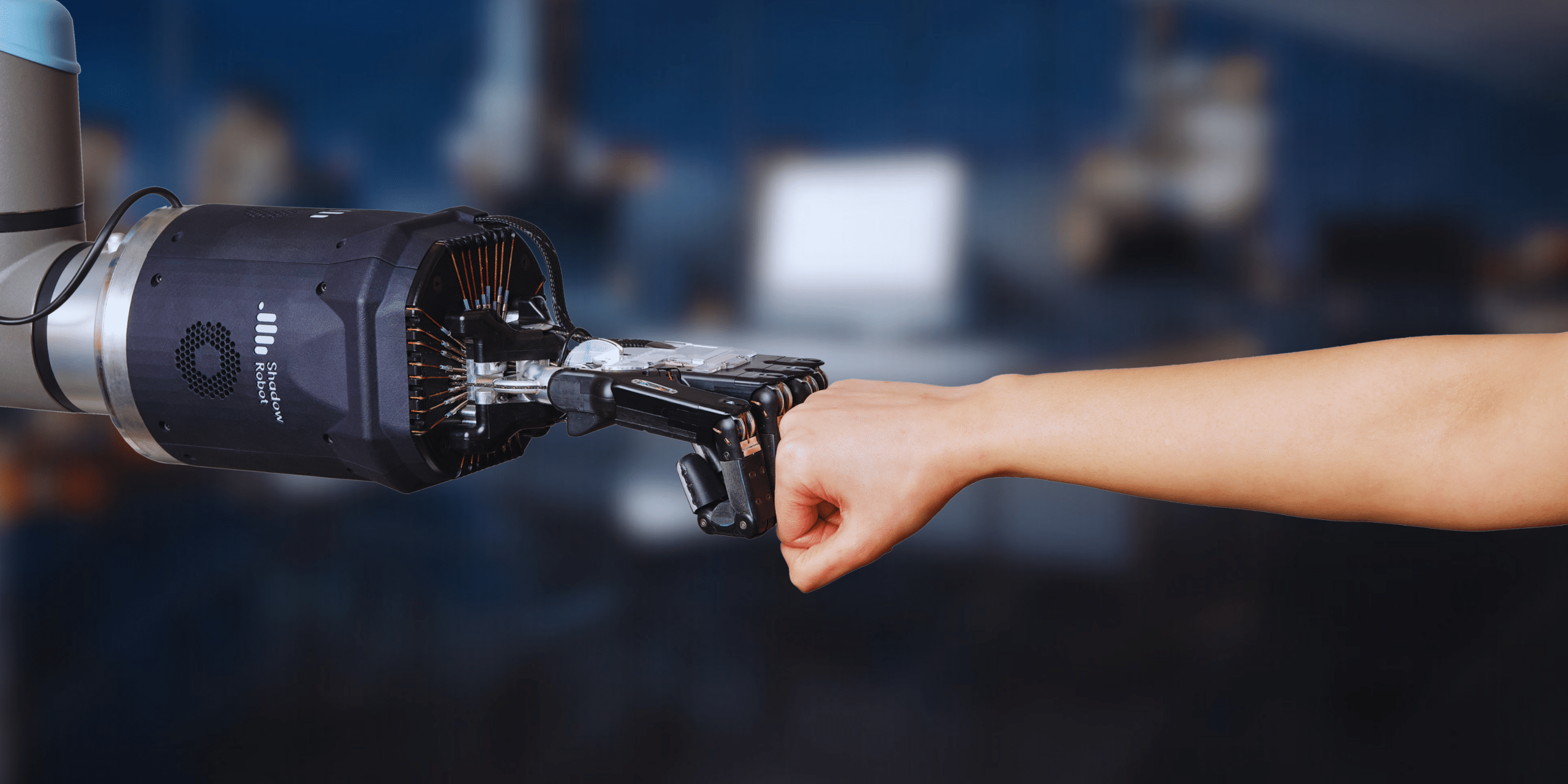
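Combining the two systems amounts to chaining coordinate frames: the glove sensors give fingertip positions relative to the source on the palm, and the room-scale tracker gives the palm’s pose in the world. A minimal sketch under stated assumptions: both readings have already been obtained (no vendor SDK calls are shown), the tracker pose is taken to coincide with the source frame (in practice a fixed offset between them would need calibrating), and orientations are unit quaternions in (w, x, y, z) order.

```python
import math
from typing import Tuple

Vec3 = Tuple[float, float, float]
Quat = Tuple[float, float, float, float]  # unit quaternion (w, x, y, z)

def rotate(q: Quat, v: Vec3) -> Vec3:
    """Rotate v by unit quaternion q: v' = v + w*t + q_vec x t, with t = 2*(q_vec x v)."""
    w, x, y, z = q
    vx, vy, vz = v
    tx, ty, tz = 2 * (y * vz - z * vy), 2 * (z * vx - x * vz), 2 * (x * vy - y * vx)
    return (
        vx + w * tx + (y * tz - z * ty),
        vy + w * ty + (z * tx - x * tz),
        vz + w * tz + (x * ty - y * tx),
    )

def fingertip_in_world(palm_pos: Vec3, palm_rot: Quat, tip_in_palm: Vec3) -> Vec3:
    """world <- palm (from the tracker), then palm <- fingertip (from the glove)."""
    rx, ry, rz = rotate(palm_rot, tip_in_palm)
    return (palm_pos[0] + rx, palm_pos[1] + ry, palm_pos[2] + rz)

# Palm 1.2 m up, turned 90 degrees about the vertical (z) axis;
# fingertip 10 cm along the palm's x axis (illustrative values)
half = math.radians(90) / 2
palm_rot = (math.cos(half), 0.0, 0.0, math.sin(half))
tip = fingertip_in_world((1.0, 0.5, 1.2), palm_rot, (0.1, 0.0, 0.0))
print(tuple(round(c, 3) for c in tip))  # (1.0, 0.6, 1.2)
```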

Now, what we actually want is to know accurately where to put the robot hand, which isn’t quite the same thing as knowing accurately where the human hand is. We have to take the data from the human hand and map it onto the robot hand. This is a kinematic challenge: human and robot movements don’t correspond exactly, so we need to convert one into the other. The best method for mapping human data to a robot hand depends on the application. For example, if you need to control a robot hand in real time, direct mapping may be the best option. If you are only controlling the robot hand for offline analysis, inverse kinematics or hybrid mapping may be more feasible. Needless to say, the more complex the robot, the more challenging this becomes. Luckily for us, complex robots are a speciality at Shadow!
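As a rough illustration of direct mapping, each measured human joint angle can be scaled, offset and clamped to the limits of a corresponding robot joint. This is a minimal sketch: the joint names, scale factors and limits below are hypothetical placeholders, not the parameters of any real hand, and an inverse-kinematics approach (not shown) would instead solve for robot joints that reach the human fingertip positions.

```python
from dataclasses import dataclass
from typing import Dict

@dataclass
class JointMap:
    robot_joint: str       # hypothetical robot joint name
    scale: float = 1.0     # compensates for differing ranges of motion
    offset: float = 0.0    # radians
    lower: float = 0.0     # robot joint limits in radians (illustrative)
    upper: float = 1.57

def direct_map(human_angles: Dict[str, float], joint_maps: Dict[str, JointMap]) -> Dict[str, float]:
    """Map human joint angles (radians) to robot joint targets, one joint at a time."""
    targets = {}
    for human_joint, m in joint_maps.items():
        angle = human_angles[human_joint] * m.scale + m.offset
        targets[m.robot_joint] = min(max(angle, m.lower), m.upper)  # respect robot limits
    return targets

maps = {
    "index_mcp": JointMap("robot_index_j1"),
    "index_pip": JointMap("robot_index_j2", scale=0.9),
}
print(direct_map({"index_mcp": 0.8, "index_pip": 2.0}, maps))
# {'robot_index_j1': 0.8, 'robot_index_j2': 1.57}
```

Because each joint needs only a few arithmetic operations, this kind of mapping is cheap enough for real-time control; the trade-off is that it ignores differences in link lengths, so fingertip positions will not match exactly.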

So, the human wears a set of gloves and uses them to control the robot as it performs the task. After a few rudimentary training tasks, the operator gets the hang of the process and performs pretty well. How do we learn from this?

We can precisely monitor the instructions sent to the robot and reproduce them. This is ideal when operating in a controlled environment where all variables remain consistent – a scenario commonly found in an automation setting within a quality assurance laboratory. In the past, we employed this technique to build a functional robotic kitchen. While it allows you to execute repetitive tasks, it lacks the ability to cope with variation.
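Replaying a recording typically means resampling it at the controller’s update rate. A minimal sketch, assuming the log holds strictly increasing timestamps in seconds and joint-angle tuples; the 100 Hz rate and the sample data are illustrative. It reproduces the recording exactly, which is precisely why it cannot cope with variation.

```python
from bisect import bisect_right
from typing import Sequence, Tuple

Pose = Tuple[float, ...]

def sample_trajectory(times: Sequence[float], poses: Sequence[Pose], t: float) -> Pose:
    """Linearly interpolate a recorded trajectory at time t (held constant at the ends)."""
    if t <= times[0]:
        return tuple(poses[0])
    if t >= times[-1]:
        return tuple(poses[-1])
    i = bisect_right(times, t)          # times[i-1] <= t < times[i]
    t0, t1 = times[i - 1], times[i]
    a = (t - t0) / (t1 - t0)
    return tuple(p0 + a * (p1 - p0) for p0, p1 in zip(poses[i - 1], poses[i]))

# Recorded command log: three timestamped poses of a two-joint finger
times = [0.0, 0.5, 1.0]
poses = [(0.0, 0.0), (1.0, 0.2), (1.0, 0.4)]

# Resample at 100 Hz for replay; each target would then be sent to the controller
replay = [sample_trajectory(times, poses, k / 100) for k in range(101)]
print(replay[25], replay[-1])
```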

We can capture and analyze data from numerous tests and assess them for consistency. If the results are largely similar, they can be applied directly. If they vary a lot, however, we can delve into our algorithm toolbox to try to understand the discrepancies. This is where it helps to have a wide range of data from the robot’s force, touch and joint sensors. The question then arises: can we use vision data, such as cameras aimed at the work area, to discern what’s different? To be effective, this vision data needs to be synchronized with the robot sensor data, which requires a shared framework for all data streams.
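Once every stream carries timestamps from a common clock, synchronizing them can be as simple as pairing each camera frame with the nearest robot-sensor sample. A minimal sketch, assuming sorted timestamps in seconds on a shared clock (how that clock is established is outside this sketch); the 30 Hz and 100 Hz rates and the 5 ms tolerance are illustrative.

```python
from bisect import bisect_left
from typing import List, Sequence, Tuple

def nearest_index(timestamps: Sequence[float], t: float) -> int:
    """Index of the timestamp closest to t; timestamps must be sorted ascending."""
    i = bisect_left(timestamps, t)
    if i == 0:
        return 0
    if i == len(timestamps):
        return len(timestamps) - 1
    return i if timestamps[i] - t < t - timestamps[i - 1] else i - 1

def align_frames_to_sensors(frame_times: Sequence[float], sensor_times: Sequence[float],
                            max_gap: float) -> List[Tuple[int, int]]:
    """Pair each camera frame with the nearest sensor sample on the shared clock,
    skipping frames with no sample within max_gap seconds."""
    pairs = []
    for f, ft in enumerate(frame_times):
        s = nearest_index(sensor_times, ft)
        if abs(sensor_times[s] - ft) <= max_gap:
            pairs.append((f, s))
    return pairs

# Illustrative rates: camera at 30 Hz, robot sensors at 100 Hz, same clock
frames = [k / 30 for k in range(4)]
sensors = [k / 100 for k in range(11)]
print(align_frames_to_sensors(frames, sensors, max_gap=0.005))
# [(0, 0), (1, 3), (2, 7), (3, 10)]
```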

We can also gather data from multiple tests and “feed it to the AI”, that is, use it to train a machine learning system to replicate similar movements from similar data.

This can be done in several ways.

We might train a traditional learning model on the datasets so that similar inputs generate similar outputs. Alternatively, if we have enough data, we could use a transformer model to generate movements. We can even break down the data into smaller segments and train a network to assemble these fragments into the correct sequences.
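The simplest version of “similar inputs generate similar outputs” is a nearest-neighbour lookup over recorded demonstrations. The sketch below is a stand-in for the idea, not one of the models mentioned above: it assumes each demonstration has been reduced to an input vector (here, a hypothetical object position) and an output vector (hypothetical grasp joint targets), and it predicts by averaging the outputs of the k closest demonstrations. A transformer or a segment-assembling network would replace this lookup with a learned model.

```python
import math
from typing import Sequence, Tuple

def knn_predict(inputs: Sequence[Sequence[float]], outputs: Sequence[Sequence[float]],
                query: Sequence[float], k: int = 3) -> Tuple[float, ...]:
    """Average the outputs of the k demonstrations whose inputs are closest to query."""
    ranked = sorted(range(len(inputs)), key=lambda i: math.dist(inputs[i], query))
    nearest = ranked[:k]
    return tuple(sum(outputs[i][d] for i in nearest) / len(nearest)
                 for d in range(len(outputs[0])))

# Hypothetical demonstrations: object position (x, y) in metres -> two grasp joint angles
demo_inputs = [(0.0, 0.0), (0.1, 0.0), (0.0, 0.1), (0.5, 0.5)]
demo_outputs = [(0.2, 0.4), (0.3, 0.5), (0.25, 0.45), (1.0, 1.2)]
print(knn_predict(demo_inputs, demo_outputs, query=(0.05, 0.05)))
```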

Given the impressive capabilities of modern machine learning systems in generating text, images, video, and even code, this approach appears highly promising – and it’s only the beginning!

So, does this sound like it could help with a problem that you face? If it does, then let’s have a chat to see if the Shadow Robot team can help!